PhD Defense

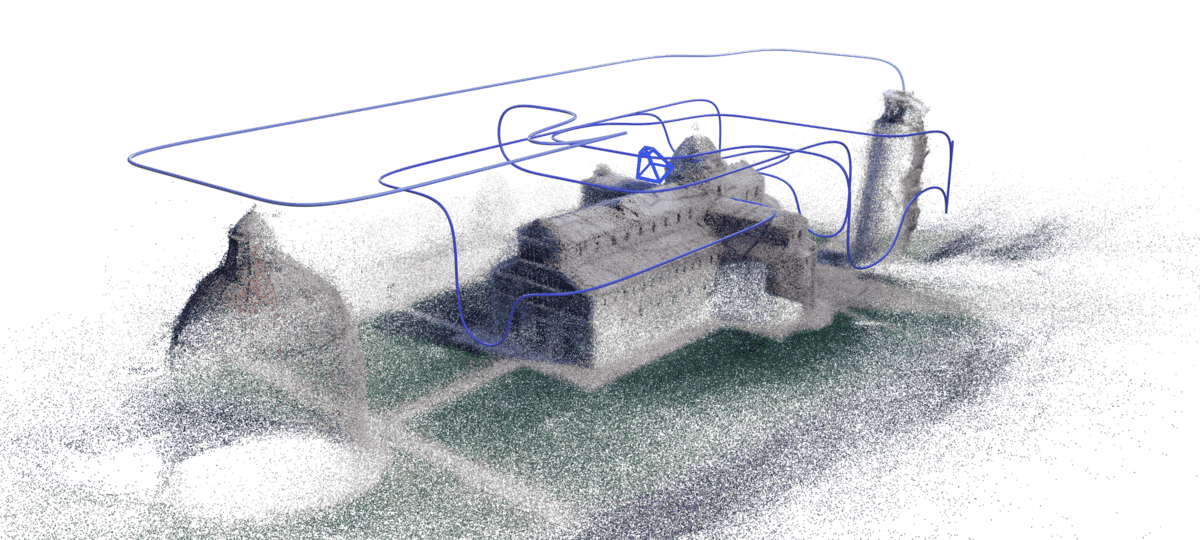

Exploring and Rebuilding Reality: Learning 3D Reconstruction from Capturing Images to Building Photorealistic Representations

Antoine Guédon

IMAGINE / LIGM

École des Ponts ParisTech (ENPC)